For teams seeking a more efficient way to deploy, test, and scale applications without managing underlying infrastructure, the Serverless Framework provides a practical solution. The framework streamlines the development and deployment of cloud applications by allowing you to define the entire application architecture, including APIs, databases, functions, and containers, in a single configuration file. Applications can be deployed with one command, which enables scalable, event-driven workloads, primarily on AWS, without the need to manage servers directly.

This article discusses the Serverless Framework and Serverless Containers, including when each approach is appropriate, how cloud environments can be replicated locally, and why these frameworks are increasingly used for building scalable and efficient cloud-native applications.

Serverless key benefits

The Serverless team also provides the Serverless Containers Framework, which is intended for teams that deploy container-based workloads on services like AWS Lambda and AWS Fargate. Together, these tools allow development teams to use the most suitable compute model for each workload, whether function-based or containerized, while maintaining a consistent development experience.

A major advantage of Serverless is the ability to replicate cloud environments locally. This improves developer productivity and helps reduce cloud service costs during the development process. Beyond this capability, the Serverless Framework offers several features that support an efficient and predictable workflow:

- Speed: Deploy complex architectures in minutes instead of days.

- Scalability: Automatically adjust resources based on demand.

- Cost Efficiency: Pay only for actual usage, with no charges for idle servers.

- Infrastructure as Code (IaC): Store infrastructure definitions in version control to ensure consistency and repeatability.

Basic project structure

A typical Serverless project that targets AWS includes the following components.

- Compute

– Functions: AWS Lambda functions (or equivalents on other cloud platforms) that are triggered by events such as HTTP requests, S3 object uploads, SQS messages, or scheduled executions.

– Containers: Using the Serverless Containers Framework, teams can define and deploy container-based workloads. This approach allows workloads to run either as containers on AWS Lambda or on AWS ECS Fargate, all defined within a single configuration file.

- Events

Events are the triggers that connect compute components to cloud resources. These may include interactions with databases, storage services, message queues, and other event sources. Events are declared directly within the project’s configuration.

- Resources

Resources refer to the cloud infrastructure components that the application depends on, such as databases, queues, storage buckets, or networking elements.

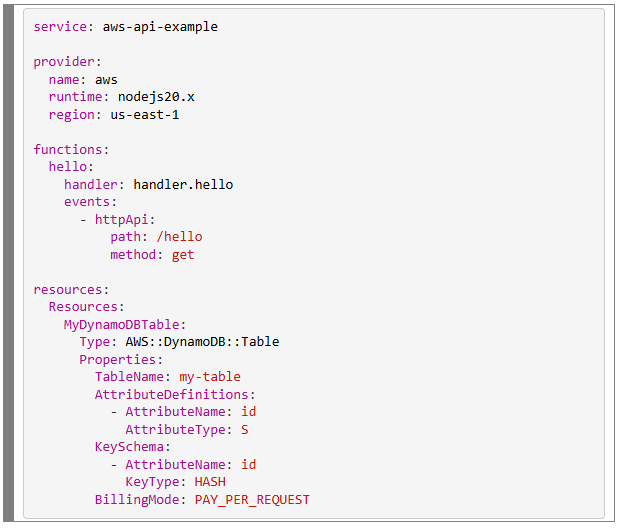

Below is a simple example of a Serverless Framework YAML configuration file for an API:
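The sketch here assumes a Node.js runtime and a hypothetical handler exported from `src/handler.js`; the service name, region, and route are illustrative placeholders rather than values from a real project.

```yaml
# serverless.yml: minimal HTTP API sketch (all names are illustrative assumptions)
service: hello-api              # hypothetical service name

provider:
  name: aws
  runtime: nodejs20.x
  region: us-east-1             # assumed region
  stage: dev                    # default stage; can be overridden with --stage

functions:
  hello:
    handler: src/handler.hello  # hypothetical file and exported function
    events:
      - httpApi:                # adds a route on an API Gateway HTTP API
          path: /hello
          method: get
```

Running `serverless deploy` from the project directory packages the function and creates the route in a single step.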

In many cases, resources do not need to be defined explicitly for the Serverless Framework to create them. For example, if a function is triggered by S3 object uploads or by an SNS topic, the framework can provision the bucket or topic automatically based on the event configuration. This reduces repetitive setup work while still allowing developers to customize or explicitly define resources when required. Some event sources, such as SQS, attach to an existing queue, so the queue itself is declared explicitly or created elsewhere.

The Serverless Framework enables teams to define key infrastructure components directly within the project configuration. Common AWS resources that can be provisioned through the framework include:

- DynamoDB tables for NoSQL data storage

- S3 buckets for file and object storage

- SQS queues for message processing and decoupling

- SNS topics for publish and subscribe communication

- API Gateway for API management

- IAM roles and policies for access control

- CloudWatch alarms for monitoring and alerting

- VPC configurations for networking and security
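As a hedged illustration of explicit resource definitions, the fragment below declares an SQS queue and a DynamoDB table using CloudFormation syntax under `resources`, then connects the queue to a function. The logical IDs (`OrdersQueue`, `OrdersTable`), resource names, and handler path are hypothetical.

```yaml
# Fragment of serverless.yml (hypothetical names throughout)
functions:
  processOrder:
    handler: src/orders.process             # hypothetical handler
    events:
      - sqs:
          arn:
            Fn::GetAtt: [OrdersQueue, Arn]  # reference the queue defined below
          batchSize: 10

resources:
  Resources:
    OrdersQueue:
      Type: AWS::SQS::Queue
      Properties:
        QueueName: orders-${sls:stage}
    OrdersTable:
      Type: AWS::DynamoDB::Table
      Properties:
        TableName: orders-${sls:stage}
        BillingMode: PAY_PER_REQUEST        # on-demand capacity
        AttributeDefinitions:
          - AttributeName: orderId
            AttributeType: S
        KeySchema:
          - AttributeName: orderId
            KeyType: HASH
```

Including the stage in each resource name keeps deployments to different stages from colliding.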

Cloud agnostic capability

With the release of Serverless Framework V4, official support for non-AWS cloud providers has been deprecated. This includes earlier integrations with providers such as Azure and Google Cloud.

This change may affect teams with existing multi-cloud architectures, but it also signals a shift in how the framework intends to support alternative cloud environments in the future. Instead of maintaining legacy implementations, the Serverless team is focusing on establishing a foundation for a more modern, reliable, and scalable approach to multi-cloud support. This new approach is expected to be introduced in upcoming releases.

Advanced IaC capabilities

The Serverless Framework enhances its Infrastructure as Code capabilities through the following features:

- State management: The framework tracks infrastructure state through the Serverless Dashboard or local state files. This supports safe incremental updates and reduces the risk of unintended changes.

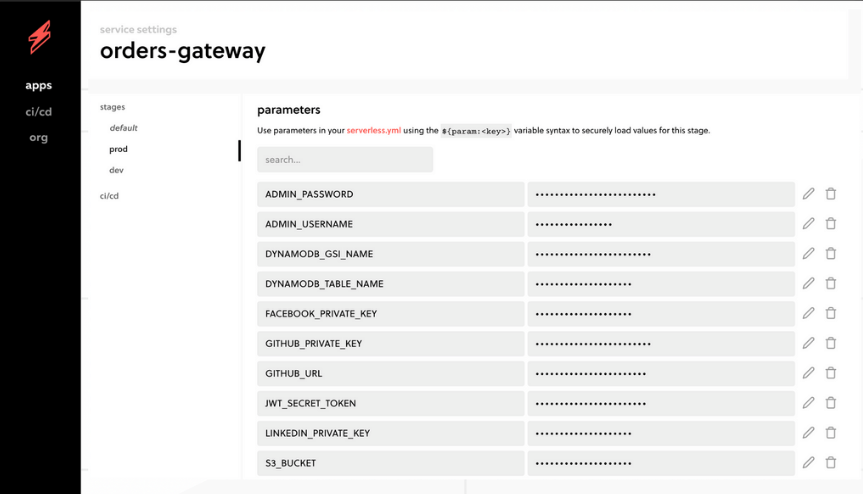

- Environment variables and secrets: It offers flexible configuration options for different environments and includes secure mechanisms for managing sensitive values.

- Custom IAM roles: Teams can define fine-grained permissions for individual functions, which supports the principle of least privilege and improves security control.

- Plugin ecosystem: The framework supports a wide range of plugins that enable modular and maintainable configurations. This includes the use of variables, file references, and shared components to reduce duplication.

- Nested stacks: Large applications can be broken down into smaller and more manageable units, improving maintainability and enabling clearer separation of concerns.
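The configuration-related items in this list can be sketched in a single fragment. It assumes a Parameter Store entry at `/my-app/db-password` and a shared `config/common.yml` file, both hypothetical, and it grants permissions at the provider level against a placeholder table ARN. Per-function roles are not shown.

```yaml
# Fragment of serverless.yml (paths, names, and ARNs are assumptions)
provider:
  name: aws
  runtime: nodejs20.x
  stage: ${opt:stage, 'dev'}                 # stage from the CLI, defaulting to dev
  environment:
    STAGE: ${sls:stage}
    LOG_LEVEL: ${env:LOG_LEVEL, 'info'}      # read from the local environment
    DB_PASSWORD: ${ssm:/my-app/db-password}  # hypothetical Parameter Store entry
  iam:
    role:
      statements:                            # narrow permissions for the service role
        - Effect: Allow
          Action:
            - dynamodb:GetItem
            - dynamodb:PutItem
          # placeholder ARN; replace with the real table
          Resource: arn:aws:dynamodb:us-east-1:123456789012:table/orders-dev

custom:
  shared: ${file(./config/common.yml)}       # hypothetical shared settings file
```

Resolving values through variables keeps stage-specific settings and secrets out of the committed configuration.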

Serverless Containers Framework

Although the Serverless Framework is primarily designed for function-based workloads, many modern applications require container-based execution. Examples include long-running APIs, background processing, or services that maintain internal state.

To support these scenarios, the Serverless team has introduced the Serverless Containers Framework (SCF). This framework provides a unified way to deploy containers on serverless platforms such as AWS Lambda Containers and AWS ECS Fargate. It offers the same declarative, configuration-driven workflow as the Serverless Framework, but is tailored to containerized workloads.

Below is a summary of the key capabilities of SCF:

Unified container development and deployment

- Deploy containers to AWS Lambda and AWS Fargate using a single workflow.

- Combine Lambda and Fargate compute models within the same API.

- Switch between platforms without requiring code changes or causing service interruptions.

- Provision production-ready infrastructure automatically, including VPC settings, networking configurations, and Application Load Balancer setup.

Rich development experience

- Build Lambda and Fargate containers efficiently with full local emulation.

- Route and simulate AWS Application Load Balancer traffic through a local environment.

- Improve development speed with instant hot reloading.

- Inject live AWS IAM roles into containers during development to mirror production settings.

Production-ready features

- Detect code and configuration changes to enable safe and efficient deployments.

- Support one or multiple custom domains within the same API.

- Manage SSL certificates automatically.

- Apply secure default IAM and networking configurations.

- Load environment variables from sources such as `.env` files, AWS Systems Manager Parameter Store, AWS Secrets Manager, and Terraform state files.

- Multi-cloud support is planned for future releases.

Configuration

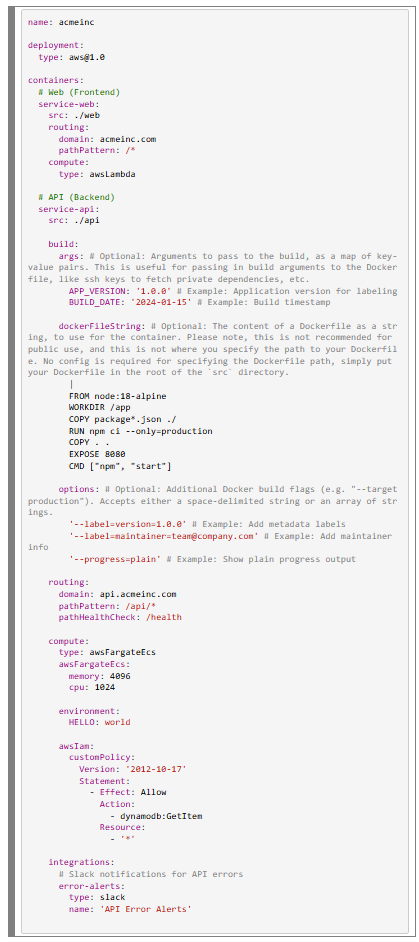

The Serverless Containers Framework uses a straightforward YAML configuration model to define container-based architectures. This configuration is stored in the serverless.containers.yml file. Even complex application setups can be described in a concise and structured way.

Below is a simple example of how a full-stack application can be defined using this configuration approach:
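The sketch here uses a hypothetical application name and directory layout. Property names such as `deployment.type`, `routing.pathPattern`, and `compute.type` are assumed from the SCF documentation and may change between releases, so verify them against the current schema before use.

```yaml
# serverless.containers.yml: full-stack sketch (names and paths are assumptions)
name: acme-app                  # hypothetical application name

deployment:
  type: awsApi@1.0              # AWS API deployment type (assumed from the SCF docs)

containers:
  web:                          # frontend container
    src: ./web                  # directory holding the app and optional Dockerfile
    routing:
      pathPattern: /*           # catch-all route
      pathHealthCheck: /health
    compute:
      type: awsLambda           # run this container on AWS Lambda

  api:                          # backend container
    src: ./api
    routing:
      pathPattern: /api/*
      pathHealthCheck: /api/health
    compute:
      type: awsFargateEcs       # run this container on ECS Fargate
```

In this layout, the `compute.type` value is the only setting that differs between the Lambda-backed and Fargate-backed containers.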

In the Serverless Containers Framework (SCF), there are three primary options for providing a Docker image for an API.

- Inline Docker definition

You can specify a Dockerfile directly within `serverless.containers.yml` by using the `dockerFileString` property. This can be useful for simple cases, but it is not recommended for production environments due to limited maintainability.

- Referencing a Dockerfile in the source directory

A standard Dockerfile can be placed in the application’s source directory and referenced through the `src` property in the YAML configuration. SCF automatically detects the file and uses it during image creation.

- Automatic image generation

If no Dockerfile is provided, SCF can build an image automatically based on the project’s runtime. For example, Node.js projects are detected through `package.json` and Python projects through `requirements.txt`. This allows the application to run without manually creating a Dockerfile. Automatic image generation is currently limited to these runtimes, but SCF can support any language when a valid Dockerfile is supplied.

This flexibility allows development teams to choose between a fully customized container setup or SCF’s built-in runtime images for a faster development workflow.

When to use which

Depending on the workload, you will choose between the Serverless Framework, which is focused on function-based execution and simplifies deployment, and the Serverless Containers Framework, which provides greater flexibility by allowing workloads to run in containers.

| Scenario | Use Serverless Framework | Use Serverless Containers Framework |
|---|---|---|
| Short, event-driven functions | X | |
| Long-running processes | | X |
| High-memory or CPU tasks | | X |
| Mix of both | X | X |
| Gradual migration from traditional containers | | X |
| Lightweight APIs or event handlers | X | |

Serverless dashboard

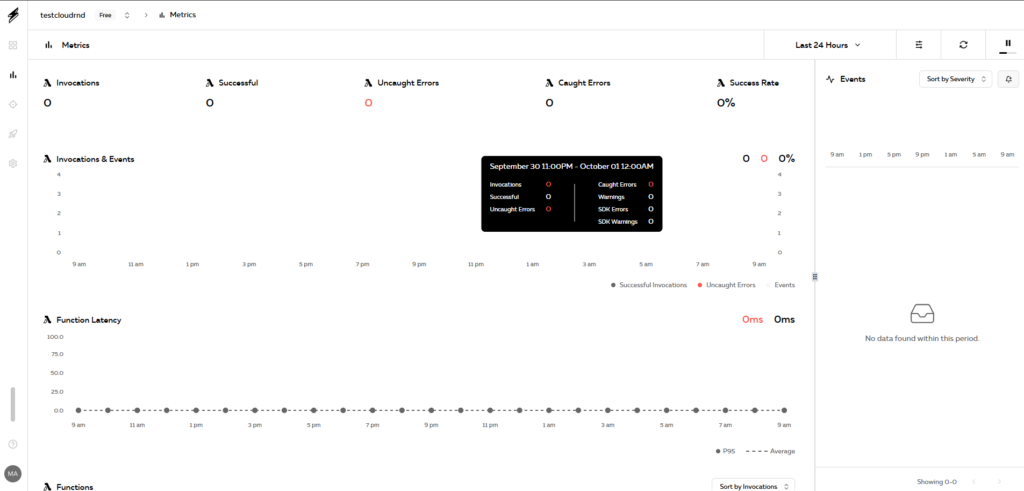

The Serverless Dashboard provides a centralized environment for building, managing, and monitoring serverless applications. It is designed to help teams maintain clarity, governance, and consistency across their serverless workloads.

The web-based interface presents cloud data in a structured and accessible way. It offers developers, operators, and product teams a shared space to track deployments, observe application behavior, review security settings, and collaborate effectively.

Real-time monitoring

The dashboard includes real-time monitoring capabilities that allow teams to track metrics such as function invocations, execution duration, and error rates. These insights are available without requiring manual configuration of CloudWatch queries.

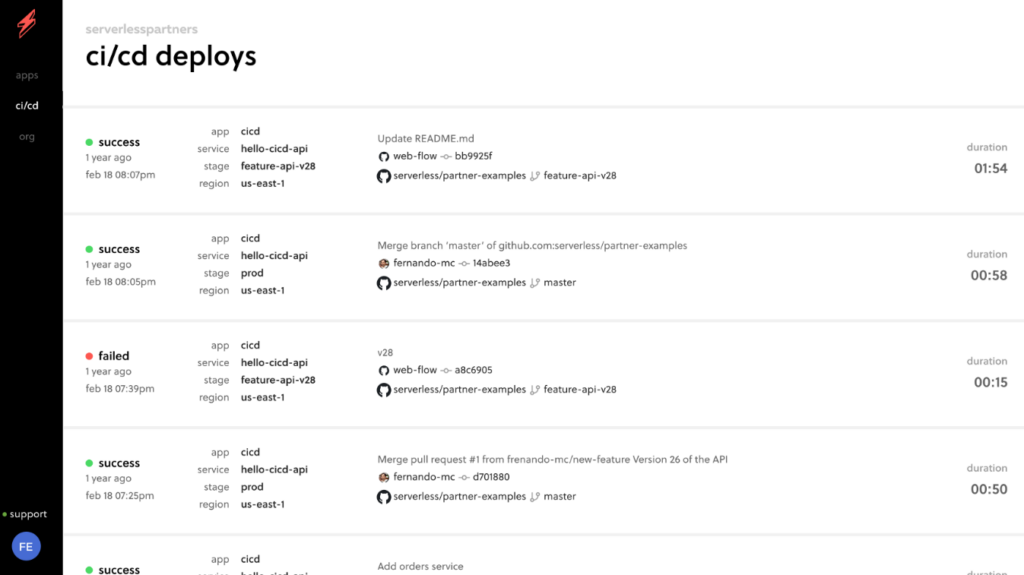

CI/CD integrations

The Serverless Dashboard supports integration with platforms such as GitHub, GitLab, and Bitbucket. These integrations enable automated deployments as part of a continuous integration and continuous delivery (CI/CD) workflow.

Secrets management

Secrets management features allow teams to control access to sensitive values, review deployment history, and coordinate configuration changes in a shared environment.

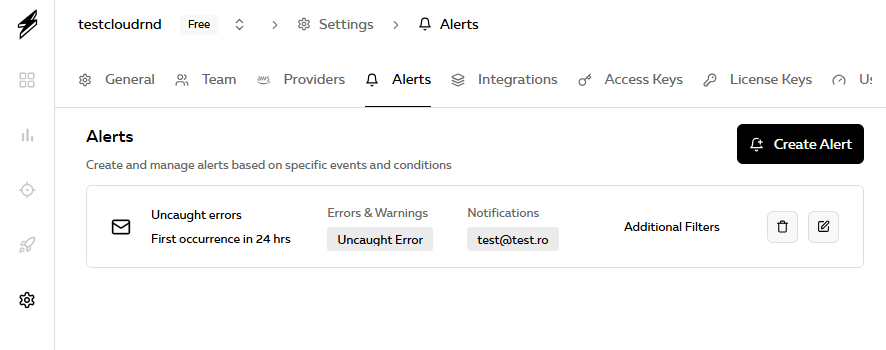

Alerts and notifications

Alerts and notifications help teams stay informed about errors, performance concerns, and unexpected usage patterns. This supports faster investigation and response during operation.

The dashboard integrates closely with the Serverless CLI, allowing developers to manage deployments from the terminal while still accessing detailed monitoring and configuration information through the web interface.

Pricing

- Pay as you go: You pay only for the credits consumed each month. There are no long-term commitments, and usage can be increased or reduced as needed.

- Reserved credits: Credits can be purchased in advance at a discounted rate, which is suitable for teams with predictable usage patterns.

Credit value breakdown

- Service instances: A service instance corresponds to a `serverless.yml` file that is deployed within a specific stage and region for more than ten days in a month. Each service instance consumes 1 credit.

- Traces: A trace represents a single AWS Lambda invocation captured by the Serverless Dashboard, including associated errors, spans, and logs. Traces are used for troubleshooting in the Trace Explorer and Alerts. One credit covers 50,000 traces.

- Metrics: Metrics reflect performance data for AWS Lambda invocations. Each invocation generates four metrics. One credit covers 4 million metrics.
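As a worked illustration of how these units combine, consider a hypothetical month with 2 service instances and 1,000,000 Lambda invocations, all of which are traced. The workload figures are invented for the example, and the calculation assumes credits scale linearly with usage, ignoring any free allowance or rounding rules.

```latex
\begin{aligned}
\text{instance credits} &= 2 \times 1 = 2 \\
\text{trace credits} &= \frac{1{,}000{,}000}{50{,}000} = 20 \\
\text{metric credits} &= \frac{1{,}000{,}000 \times 4}{4{,}000{,}000} = 1 \\
\text{total credits} &= 2 + 20 + 1 = 23
\end{aligned}
```

Under these assumptions, traces account for most of the credits, so trace volume is the figure to watch when estimating usage.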

Local development and testing

Local development tools play an important role in building serverless applications efficiently. The Serverless Framework provides several plugins that emulate cloud services on a local machine, which reduces dependency on cloud deployments during development. The Serverless Containers Framework includes its own built-in local emulation for container-based workloads.

- Serverless Offline: `serverless-offline` simulates AWS Lambda and API Gateway locally. It allows developers to invoke functions through HTTP requests and supports rapid testing, hot reloading, and multiple runtimes such as Node.js, Python, Java, and Go.

- Serverless DynamoDB: `serverless-dynamodb` provides a local DynamoDB instance for development and testing without connecting to AWS. It supports Java-based and Docker-based setups and integrates with `serverless-offline` to create a more complete local environment.

- Serverless S3 Local: `serverless-s3-local` emulates S3 buckets locally, enabling teams to test file uploads, downloads, and bucket configurations. It includes support for CORS, HTTPS, and configurable server options.

- Serverless Offline SQS: `serverless-offline-sqs` allows developers to simulate AWS SQS queues using ElasticMQ. This enables event-driven testing of Lambda functions triggered by queue messages without requiring access to AWS.

- Serverless Offline SNS: `serverless-offline-sns` provides local emulation of AWS SNS topics. It allows publishing messages and triggering Lambda functions locally, with support for automatic topic creation and customizable endpoints.

- Serverless LocalStack: `serverless-localstack` integrates the Serverless Framework with LocalStack, a comprehensive local emulator for AWS services. It supports local versions of services such as Lambda, S3, and DynamoDB, enabling a testing environment that closely resembles production. LocalStack also offers optimizations such as mounting local Lambda code for faster development iterations.
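Plugins such as these are typically enabled in `serverless.yml` after being installed as development dependencies. The fragment below is a sketch that assumes three of the plugins are installed; the port value is an illustrative choice.

```yaml
# Fragment of serverless.yml enabling local emulation plugins
plugins:
  - serverless-dynamodb      # plugin docs recommend listing emulators before serverless-offline
  - serverless-s3-local
  - serverless-offline

custom:
  serverless-offline:
    httpPort: 3000           # local port for the emulated API Gateway (assumed value)
```

With this in place, `serverless offline start` launches the local API alongside the other emulators.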

Conclusion

The Serverless ecosystem provides a structured and efficient approach to building, testing, and deploying modern cloud applications. By supporting infrastructure as code and offering tools for local emulation, it helps teams reduce development time, lower operational costs, and implement scalable architectures without the need to manage servers directly.

Both the Serverless Framework and the Serverless Containers Framework are useful options depending on the workload. Together, they offer a flexible foundation for teams that want to adopt serverless principles, build new applications, or modernize existing ones.